About the Customer

Karaca Home, a brand of Karaca, a prominent Turkish porcelain company, has rapidly gained acclaim in the home textile industry since its launch in January 2012. Known for combining aesthetics and quality, it became Turkey’s top home textile brand within six months of 2013 and has maintained its success, earning accolades like the ‘Most Successful Brand of the Year in the Home Textile-Decoration Sector’ at The One Awards 2016. Internationally recognized, it won the Martin M. Pegler Award for Visual Editing Excellence at the 2016 Global Innovation Awards.

Karaca Home offers a wide range of products, such as duvet sets and towels, made from high-quality materials, with designs created by international and Istanbul-based designers. As of 2020, it operates nearly 493 dealerships, 106 franchises, and 20 stores in Turkey, with a production facility in Denizli and collaborations in Usak and Bursa, employing about 202 people.

Customer Challenge

Karaca had containerized backend applications that were running on-premises.

The customer sought to optimize its Kubernetes management and deployment process on Amazon Elastic Kubernetes Service (EKS). The primary goal was to improve automated deployment, multi-tenancy, workload scheduling, and cluster management while adhering to best practices for security and efficiency.

The customer experienced challenges in scaling on-premises resources to meet fluctuating demand on special shopping days. AWS offered the flexibility to adjust resources based on application needs, ensuring optimal performance without the complexity of managing physical infrastructure.

The customer faced upfront capital expenses and difficulties in optimizing costs with their on-premises infrastructure. AWS’s pay-as-you-go model provided a cost-effective solution, allowing the customer to pay only for the resources consumed and avoid unnecessary financial commitments.

Managing Kubernetes on-premises presented complexities in deployment and orchestration. AWS’s fully managed container orchestration service, EKS, simplified the management of the customer’s containerized applications and reduced the operational overhead.

How the solution was deployed to meet the challenge

The delivery of the solution was planned in two main phases: discovery and implementation.

During the discovery phase, the findings were documented together with architectural recommendations. During the implementation phase, the agreed solution was deployed.

The solution’s components were:

- Implement a blue/green deployment strategy to achieve zero-downtime deployments in the Kubernetes cluster.

- Use Terraform for infrastructure deployment. Keep the Terraform scripts in version control and the state files in an Amazon S3 bucket. Test changes thoroughly in a pre-production environment before deploying to production.

- Store Kubernetes configuration files in a GitHub repository, manage continuous integration through AWS CodePipeline, and use Argo CD for continuous deployment.

- Employ Kubernetes namespaces and RBAC for soft multi-tenancy, providing logical isolation while maintaining resource efficiency and scalability.

- Implement strategies for pod scheduling based on workload requirements. Utilize a blue/green strategy for upgrading EKS clusters and add-ons.

- Deploy security tools like seccomp for container protection and Trivy for image scanning. Use Prometheus and Grafana for monitoring and observability.
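One common way to realize the blue/green item above is to run both versions side by side and repoint a Kubernetes Service selector from one to the other. The sketch below illustrates that idea only; the case study does not say how the cutover was actually performed. The `color` label, the `orders` service name, and the port numbers are assumptions made for illustration.

```python
import copy
import json


def make_service(name: str, app: str, color: str) -> dict:
    """Build a Service manifest whose selector targets one color's pods."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            # Only pods labeled with this app AND this color receive traffic.
            # The "color" label is an assumed convention, not from the source.
            "selector": {"app": app, "color": color},
            "ports": [{"port": 80, "targetPort": 8080}],
        },
    }


def switch_color(service: dict, new_color: str) -> dict:
    """Return a copy of the Service that routes to new_color instead."""
    if new_color not in ("blue", "green"):
        raise ValueError(f"unknown color: {new_color!r}")
    updated = copy.deepcopy(service)
    updated["spec"]["selector"]["color"] = new_color
    return updated


# "orders" is a hypothetical service name used only for illustration.
live = make_service("orders", "orders", "blue")
cutover = switch_color(live, "green")
print(json.dumps(cutover["spec"]["selector"]))
```

Because the old (blue) pods keep running until they are explicitly removed, rolling back amounts to switching the selector back to `blue`.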

Third party applications or solutions used

RabbitMQ: It is installed on the Kubernetes cluster to facilitate reliable message queuing and processing. Its deployment within Kubernetes ensures high availability and scalable message processing capabilities, crucial for effective communication and task distribution among various components of the cluster’s microservices architecture.

Istio: Deployed via Helm, Istio is the chosen service mesh solution for the Kubernetes environment. It manages the configuration of the service mesh and facilitates dynamic service discovery and routing, enhancing network efficiency and security.

Trivy: It is integrated as a vulnerability scanner for container images, ensuring the security of the Kubernetes cluster. Installed directly within the Kubernetes environment, Trivy scans container images for vulnerabilities as part of the CI/CD pipeline.
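As an illustration of how a scan result can gate a pipeline stage, the sketch below filters a report shaped like Trivy's JSON output (`Results`, `Vulnerabilities`, `VulnerabilityID`, `Severity`) for blocking severities. The blocking threshold, the sample report, and the placeholder CVE identifiers are assumptions, not data from this deployment. Trivy can also enforce a similar gate itself through its `--severity` and `--exit-code` options.

```python
# Severities that should fail the build; this threshold is an assumption.
BLOCKING = {"HIGH", "CRITICAL"}


def blocking_findings(report: dict, blocking=BLOCKING) -> list:
    """Return (target, vulnerability id) pairs at a blocking severity."""
    findings = []
    for result in report.get("Results") or []:
        # A result without findings may omit the "Vulnerabilities" key.
        for vuln in result.get("Vulnerabilities") or []:
            if vuln.get("Severity") in blocking:
                findings.append((result.get("Target"), vuln.get("VulnerabilityID")))
    return findings


# Made-up report with placeholder identifiers, for illustration only.
# In a pipeline this would be loaded from the scanner's JSON output file.
sample_report = {
    "Results": [
        {
            "Target": "example-image:tag",
            "Vulnerabilities": [
                {"VulnerabilityID": "CVE-0000-0001", "Severity": "LOW"},
                {"VulnerabilityID": "CVE-0000-0002", "Severity": "CRITICAL"},
            ],
        },
        {"Target": "clean-layer"},
    ]
}

found = blocking_findings(sample_report)
exit_code = 1 if found else 0  # a non-zero exit code would fail the stage
print(found, exit_code)
```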

Terraform: The company uses Terraform for infrastructure as code (IaC) to automate the deployment of their cloud infrastructure on AWS. This tool enables them to efficiently manage and version their infrastructure setup, with Terraform scripts stored in a version control system for better collaboration and tracking.
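To make the remote state setup more concrete, the sketch below generates an S3 backend definition in Terraform's JSON configuration syntax (a `backend.tf.json` file). It is a minimal example under stated assumptions: the bucket name, region, and the one-state-key-per-environment layout are hypothetical and not taken from the customer's configuration.

```python
import json


def s3_backend(bucket: str, environment: str, region: str) -> dict:
    """Build a Terraform JSON-syntax config for an S3 state backend."""
    return {
        "terraform": {
            "backend": {
                "s3": {
                    "bucket": bucket,
                    # One state file per environment (assumed layout).
                    "key": f"{environment}/terraform.tfstate",
                    "region": region,
                    "encrypt": True,
                }
            }
        }
    }


# Hypothetical bucket name and region, used only for illustration.
preprod = s3_backend("example-terraform-state", "preprod", "eu-west-1")
prod = s3_backend("example-terraform-state", "prod", "eu-west-1")

# Printing the document; writing it to backend.tf.json would let
# Terraform read it alongside the regular .tf files.
print(json.dumps(preprod, indent=2))
```

Keeping separate state keys for pre-production and production means a change can be applied and tested against one environment without touching the other's state.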

Argo CD: It is deployed within the Kubernetes cluster to automate the deployment of applications. It synchronizes applications defined in a Git repository with the Kubernetes cluster, ensuring that the application state in the cluster matches the intended state in the repository. Its presence in the Kubernetes environment streamlines the deployment process, making it more consistent and transparent.
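The Git-to-cluster synchronization described above is normally declared through an Argo CD `Application` resource. The sketch below builds one as a Python dictionary and prints it as JSON, which `kubectl` also accepts. The repository URL, path, application name, and the choice of automated sync with pruning and self-healing are assumptions for illustration; the case study does not state which sync policy was used.

```python
import json


def argo_application(name: str, repo_url: str, path: str,
                     namespace: str, revision: str = "HEAD") -> dict:
    """Build an Argo CD Application that tracks a path in a Git repository."""
    return {
        "apiVersion": "argoproj.io/v1alpha1",
        "kind": "Application",
        "metadata": {"name": name, "namespace": "argocd"},
        "spec": {
            "project": "default",
            # Desired state: the manifests stored under this path in Git.
            "source": {
                "repoURL": repo_url,
                "targetRevision": revision,
                "path": path,
            },
            # Target: the cluster Argo CD itself runs in.
            "destination": {
                "server": "https://kubernetes.default.svc",
                "namespace": namespace,
            },
            # Assumed policy: sync automatically, remove resources deleted
            # from Git, and revert manual drift in the cluster.
            "syncPolicy": {"automated": {"prune": True, "selfHeal": True}},
        },
    }


# Hypothetical repository and application names, for illustration only.
app = argo_application(
    name="orders",
    repo_url="https://github.com/example-org/k8s-config.git",
    path="apps/orders",
    namespace="orders",
)
print(json.dumps(app, indent=2))
```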

Grafana: It is deployed on the Kubernetes cluster to provide insights into the metrics and performance data of the cluster and its applications. The team can create and manage dashboards that visualize data from multiple sources to monitor the health and performance of their Kubernetes environment in real time.

AWS Services used as part of the solution

S3 is used for storing the Terraform state file and CodePipeline artifacts.

EC2 is used for EKS nodes.

EBS is used for EKS node volumes.

IAM is used for managing users and secrets in AWS.

Lambda is used for scheduling EKS nodes.

EKS is used for Kubernetes cluster management.

ELB is used for allowing access to the services exposed in EKS.

WAF is used for accepting or rejecting requests to ELB based on specific rules.

CodePipeline is used for running the continuous integration pipeline.

CodeBuild is used for SonarQube scanning and building Docker images.

ECR is used for storing container images built by CodeBuild.

VPC is used for defining the virtual network for the workloads in the AWS account.

ElastiCache is used for in-memory caching by applications deployed in EKS.

Secrets Manager is used for storing sensitive credentials.

CloudWatch is used for collecting metrics and logs.

OpenSearch is used for search and analytics by applications deployed in EKS.

EFS is used as persistent volume storage for EKS.

Outcome(s)/results

Following the successful implementation of the Amazon EKS solution, Karaca has seen significant improvements in its cloud infrastructure. The shift to EKS has improved scalability, allowing Karaca to adapt to changing demand. Karaca’s environments now run on scalable, cost-efficient, and reliable AWS services for compute, microservices, and CI/CD pipelines.

Architecture Diagrams of the specific customer deployment

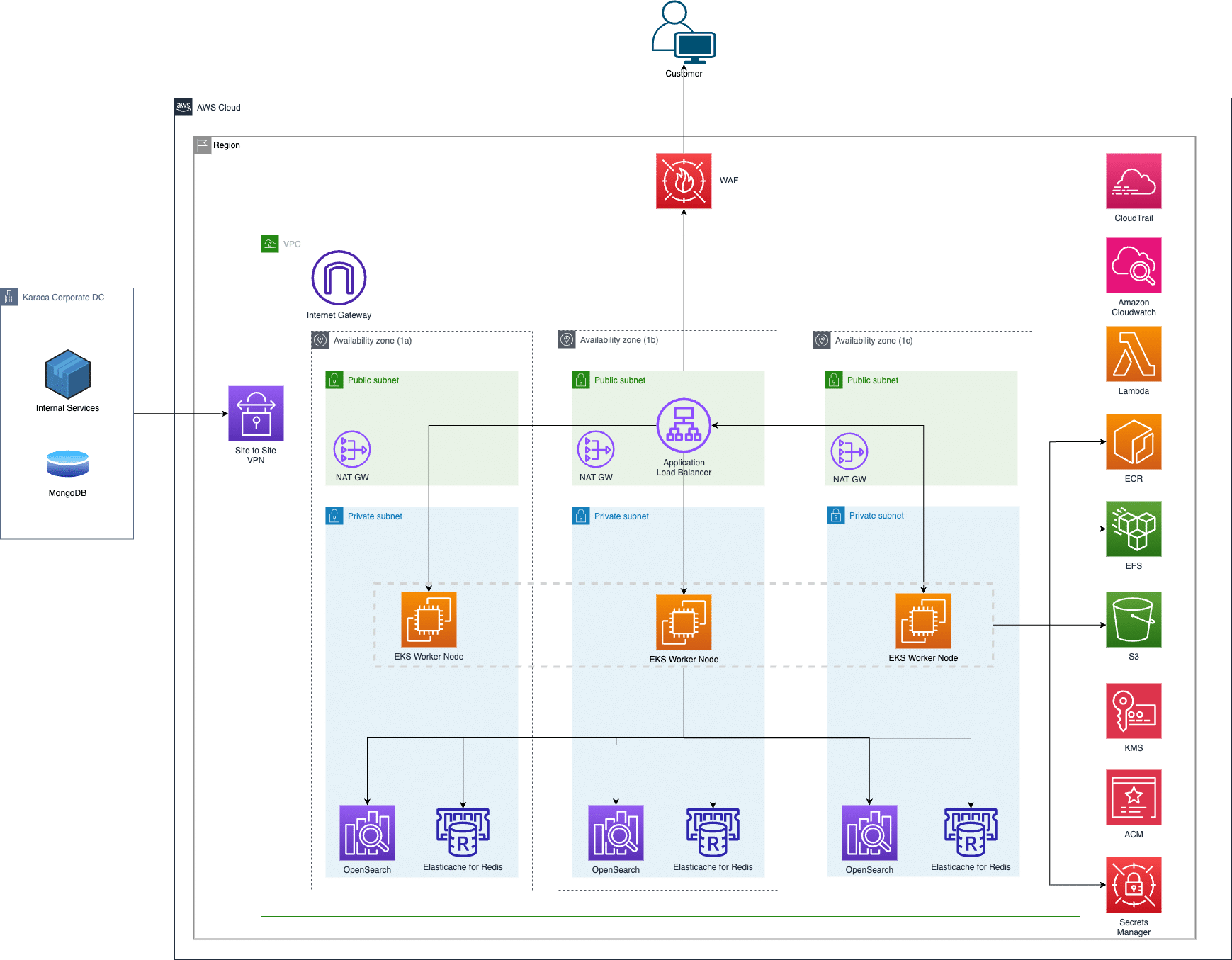

In the Karaca Prod Workload architecture diagram, a robust and secure cloud infrastructure is depicted, showcasing the integration of on-premises resources with AWS cloud services. The customer’s LAN is seamlessly connected to AWS through a Site-to-Site VPN, ensuring secure and reliable access. The deployment and updates are handled via AWS CodePipeline, enhancing the CI/CD process by pushing containerized images to the Elastic Container Registry (ECR). These applications are then orchestrated on AWS EKS, which manages EC2 instances, balancing the load with an Elastic Load Balancer and bolstering security with WAF. For enhanced performance, the architecture integrates AWS ElastiCache and Secrets Manager, alongside OpenSearch services, for efficient data retrieval and secure management of sensitive information. System health and performance monitoring are meticulously handled by CloudWatch and Grafana, providing a comprehensive view of the system’s metrics and logs.

Technical Requirements

The high-level requirements were defined as follows:

- Establish a secure Site-to-Site VPN Connection between Karaca’s corporate data center and AWS cloud environment to ensure secure and reliable data transfer.

- Implement AWS CodePipeline to automate the CI/CD process, facilitating consistent deployment and updates of containerized applications.

- Implement AWS EKS to manage and orchestrate containerized applications on EC2 instances, ensuring efficient load distribution and application scalability.

- Integrate ElastiCache to improve application performance through in-memory caching.

- Use AWS Secrets Manager to securely store and manage the secrets and other sensitive information needed by the applications.

- Deploy Amazon OpenSearch to add search and analytics capabilities to applications, enabling quick access to insights from data.

- Integrate WAF to protect applications from common web exploits and ensure security.

- Use an ELB to distribute incoming application traffic across multiple EC2 instances, providing performance and fault tolerance.
