Reinforcement Learning Meets with Wheels:

AWS DeepRacer

How to implement and practice Reinforcement Learning by using AWS DeepRacer

Imagine a 1/18th-scale race car with a “monstrous” design that can be controlled and modeled through Amazon's cloud services by applying reinforcement learning. It is called AWS DeepRacer.

Reinforcement Learning (RL) focuses on building a reward-driven numerical framework that identifies which actions bring an agent closer to the desired outcome. Many RL models are applied to real-life problems with the help of a physical robot, which acts as the agent and carries out the instructions produced by the model. In this post, we will use AWS DeepRacer to dive deep into the details of reinforcement learning and into what AWS DeepRacer can do in this field.

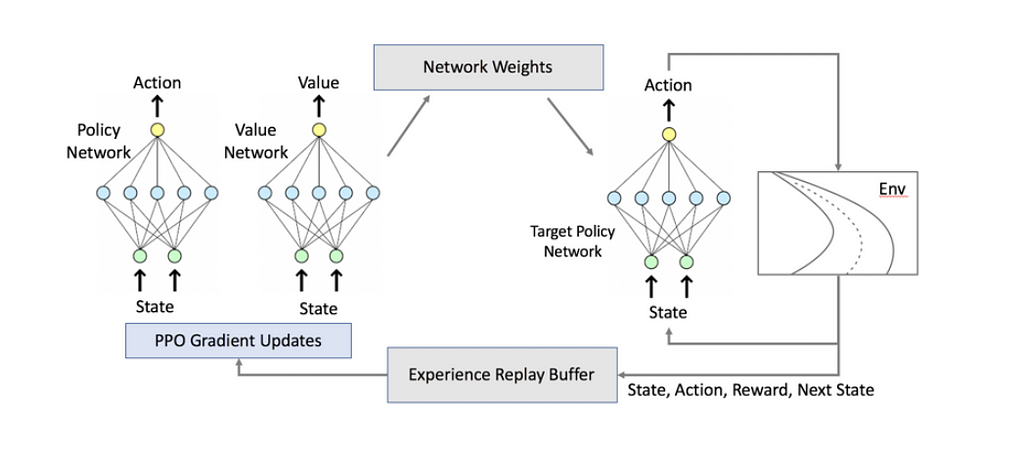

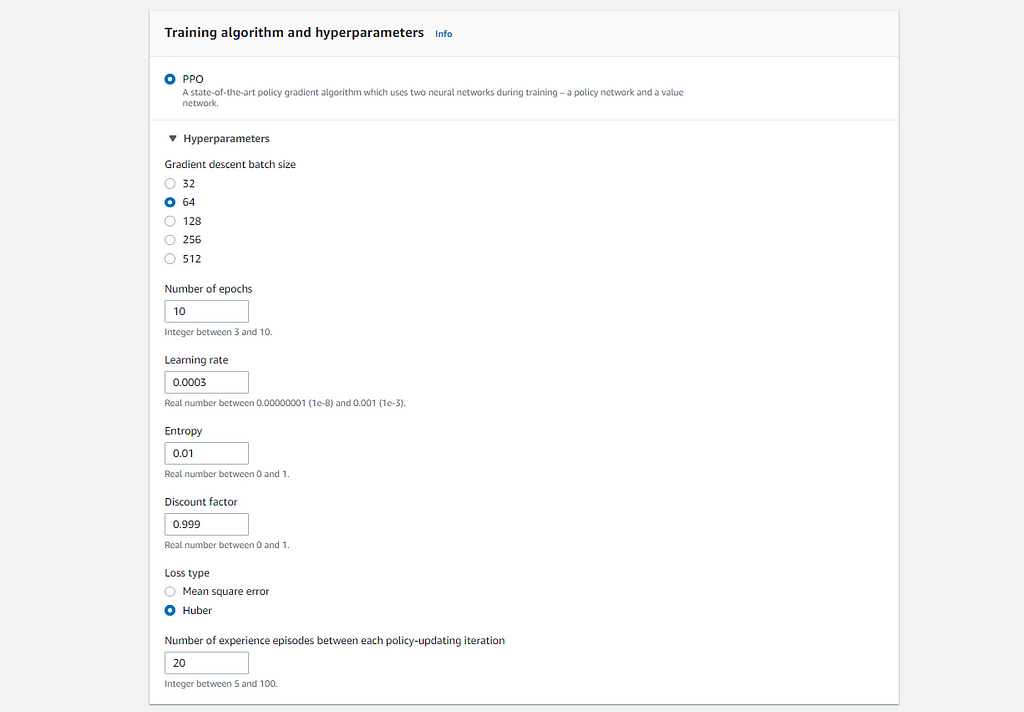

In the training step, AWS DeepRacer uses the Proximal Policy Optimization (PPO) algorithm, which relies on both a policy network and a value network, as its reinforcement learning model. PPO is a relatively recent algorithm from OpenAI that is mostly used in robotics and 3D locomotion.
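To make the role of the two networks more concrete, here is a minimal sketch of the clipped surrogate objective at the heart of PPO. It is the textbook form of the objective, not DeepRacer's internal training code: the function name, the plain-list inputs, and the `clip_eps` default of 0.2 are assumptions made for illustration. The log-probabilities would come from the policy network, and the advantages would be estimated with the help of the value network.

```python
import math


def ppo_clipped_objective(new_log_probs, old_log_probs, advantages, clip_eps=0.2):
    """Average PPO clipped surrogate objective over a batch of samples.

    All names and the clip_eps default are illustrative assumptions.
    """
    total = 0.0
    for new_lp, old_lp, adv in zip(new_log_probs, old_log_probs, advantages):
        # Probability ratio between the updated policy and the old policy.
        ratio = math.exp(new_lp - old_lp)
        # Keep the ratio inside [1 - clip_eps, 1 + clip_eps].
        clipped_ratio = max(1.0 - clip_eps, min(1.0 + clip_eps, ratio))
        # Take the more pessimistic of the unclipped and clipped terms.
        total += min(ratio * adv, clipped_ratio * adv)
    return total / len(advantages)
```

The clipping keeps a single update from moving the policy too far from the one that collected the data, which is the main idea behind PPO's stability.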

Technical Requirements

AWS DeepRacer is built around an Intel Atom® processor and runs Ubuntu 16.04 LTS. The car is powered by a 7.4V/1100mAh lithium polymer battery, and the onboard computer by a 13600mAh USB-C PD battery. In addition, it has a 4 MP camera with MJPEG, 4GB RAM, 32GB of expandable memory, 802.11ac Wi-Fi, an integrated accelerometer and gyroscope, the Intel OpenVINO toolkit, and ROS Kinetic. Its dimensions, audio out, micro HDMI display port, and USB ports are depicted below.

Currently, it can only be shipped to these eight countries: US, UK, Germany, France, Spain, Italy, Canada, and Japan. If you are outside of these eight countries, you might consider having it shipped to one of them and picking it up there.

How to Configure Your Vehicle

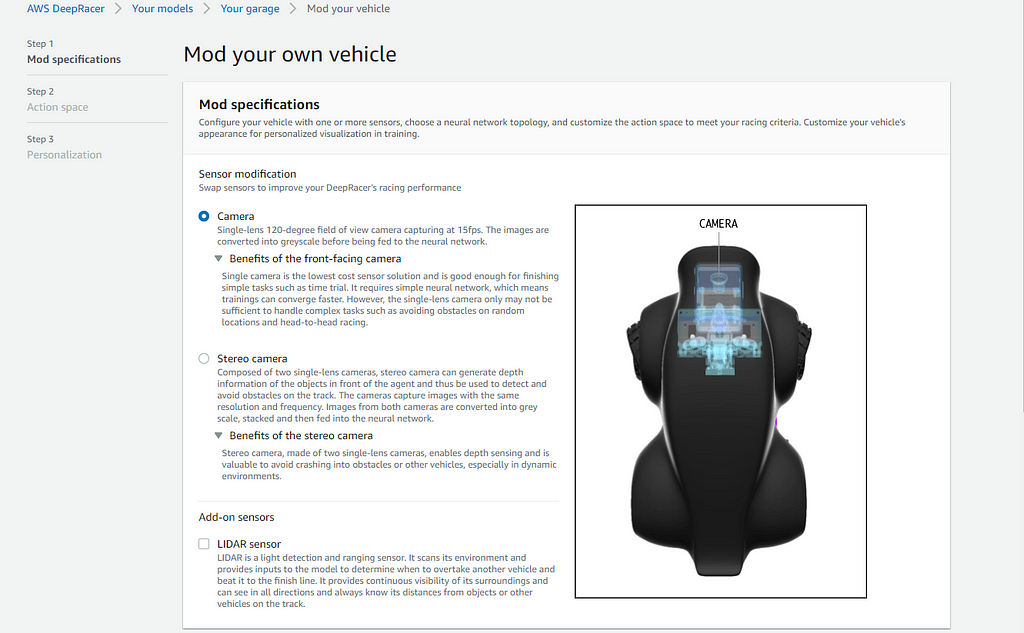

The AWS DeepRacer console has a “Garage” component where you can customize your agent/vehicle with supported sensor units, an action space, a neural network topology, and a custom appearance for training RL models to run on a DeepRacer vehicle. In other words, you can build or maintain your agent/vehicle in the “Garage” to meet the requirements of your reinforcement learning powered autonomous model. The “Garage” can be found under Your models > Your garage > Mod your vehicle.

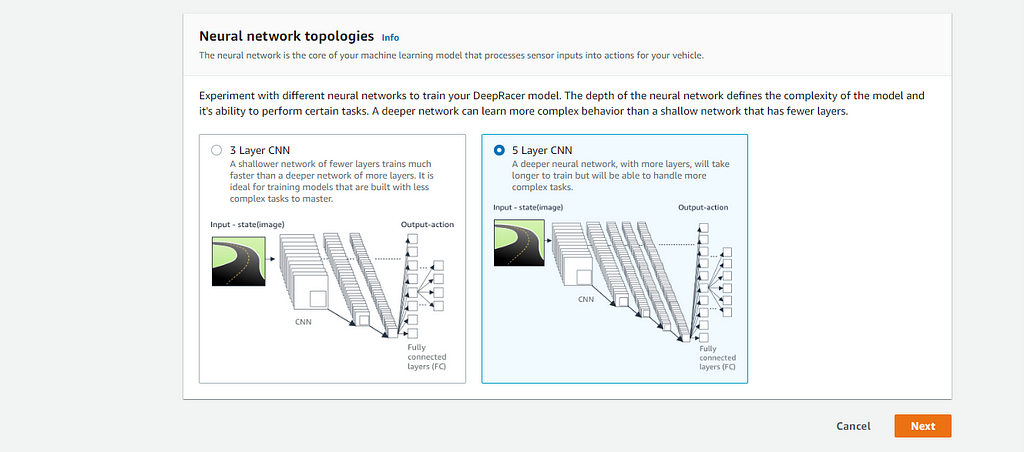

After specifying the camera option, you can choose the neural network topology by weighing the pros and cons of each option's convolutional neural network layers.
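The action space chosen in the “Garage” is the set of steering and speed combinations the agent may pick from. As a rough illustration, the sketch below builds a discrete action space from a maximum steering angle and a maximum speed. The function name, its parameters, and the even spacing are assumptions for this example and are not taken from the console itself.

```python
def build_action_space(max_steering_deg, steering_levels, max_speed, speed_levels):
    """Build a discrete action space as a list of steering/speed pairs.

    Hypothetical helper: evenly spaced angles from -max to +max,
    and evenly spaced speeds up to max_speed.
    """
    if steering_levels < 2 or speed_levels < 1:
        raise ValueError("need at least 2 steering levels and 1 speed level")

    # Evenly spaced steering angles, symmetric around straight ahead.
    step = 2 * max_steering_deg / (steering_levels - 1)
    angles = [-max_steering_deg + i * step for i in range(steering_levels)]

    # Evenly spaced speeds; the last one equals max_speed.
    speeds = [max_speed * (j + 1) / speed_levels for j in range(speed_levels)]

    # Every combination of angle and speed is one available action.
    return [{"steering_angle": a, "speed": s} for a in angles for s in speeds]


# Example: 3 steering angles x 2 speeds gives 6 actions.
actions = build_action_space(30, 3, 1.0, 2)
```

A larger action space gives the agent finer control but usually takes longer to train, so it is worth starting small.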

How to Create Your First Autonomous Drive Model with AWS DeepRacer

There is a free 90-minute online course called AWS DeepRacer: Driven by Reinforcement Learning for those with beginner-level machine learning knowledge.

You can start learning how to train your first machine learning model by logging into AWS DeepRacer through the AWS console. As a first step, you need an account with valid IAM roles to be able to access DeepRacer resources.

As a second step, you need to create a model to race. Simply click the Create button to be redirected to the model creation page.

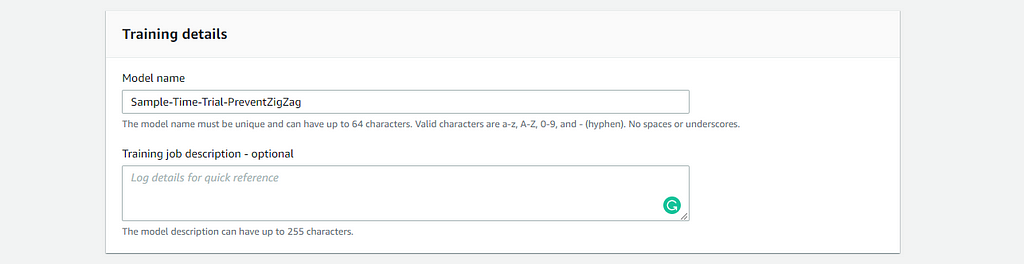

On the “Create model” page, the first step is to fill in the “Model name” field. Optionally, you can also add a description of the model, up to 255 characters, to remind you of its details.

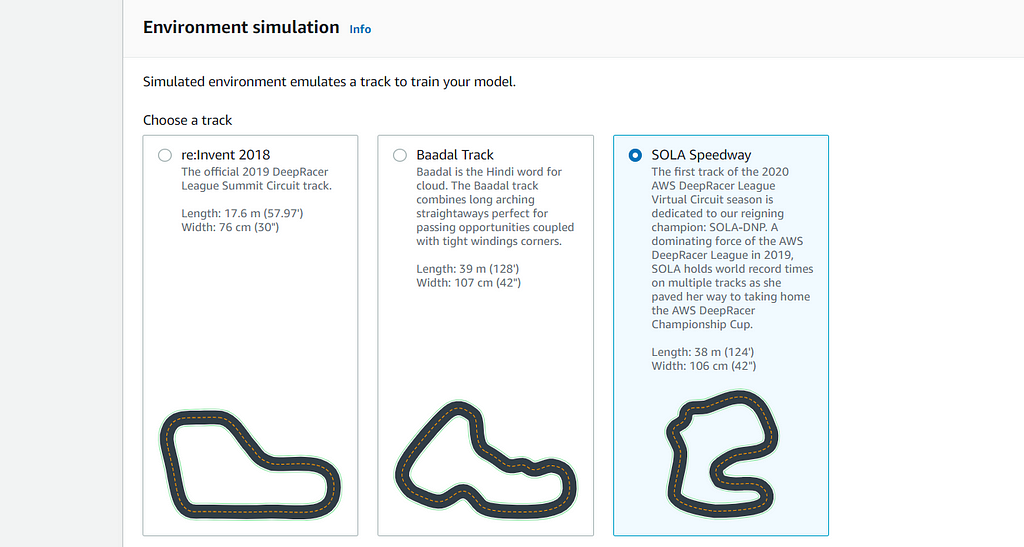

As a next step, select a simulation environment from the “Choose a track” list of tracks for DeepRacer to race on. For each environment, its details are shown below the track heading.

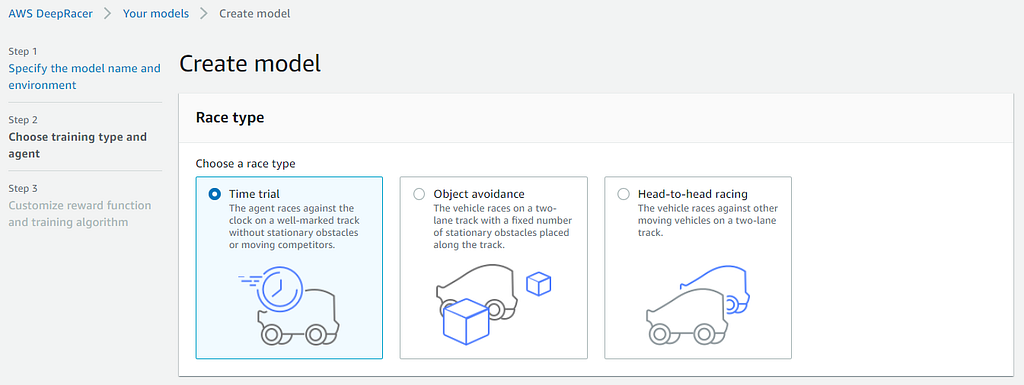

After you choose your environment, choose a “Race type” from the three available types. The first type is called “Time trial”, in which the time DeepRacer takes to finish the race is the deciding factor.

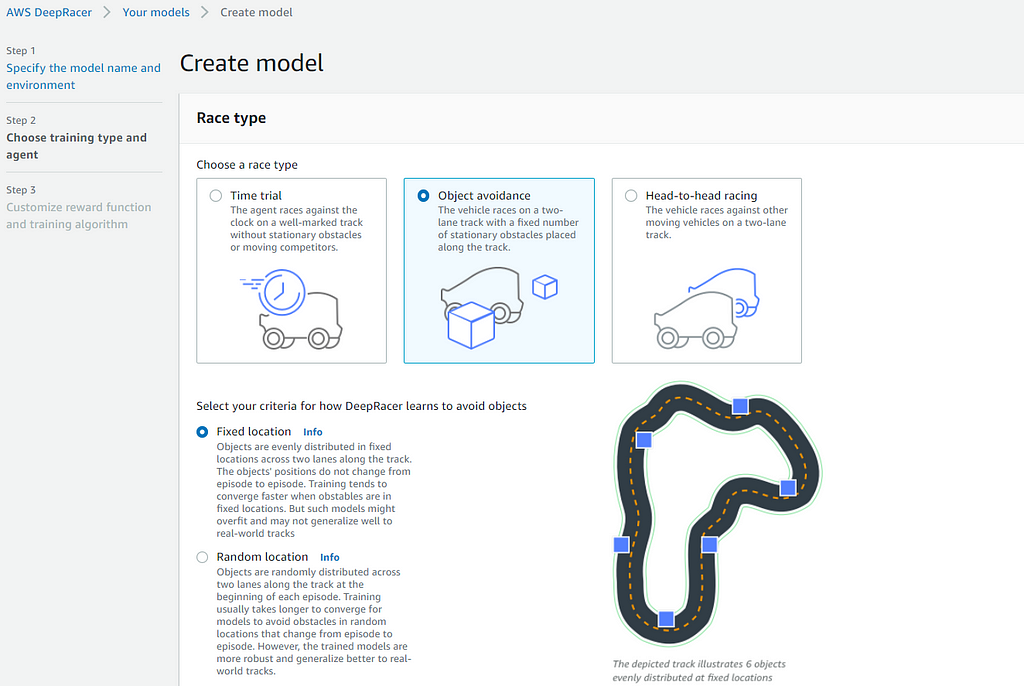

The second type is called “Object avoidance”, in which the agent must also account for obstacles on the track in order to finish the race successfully.

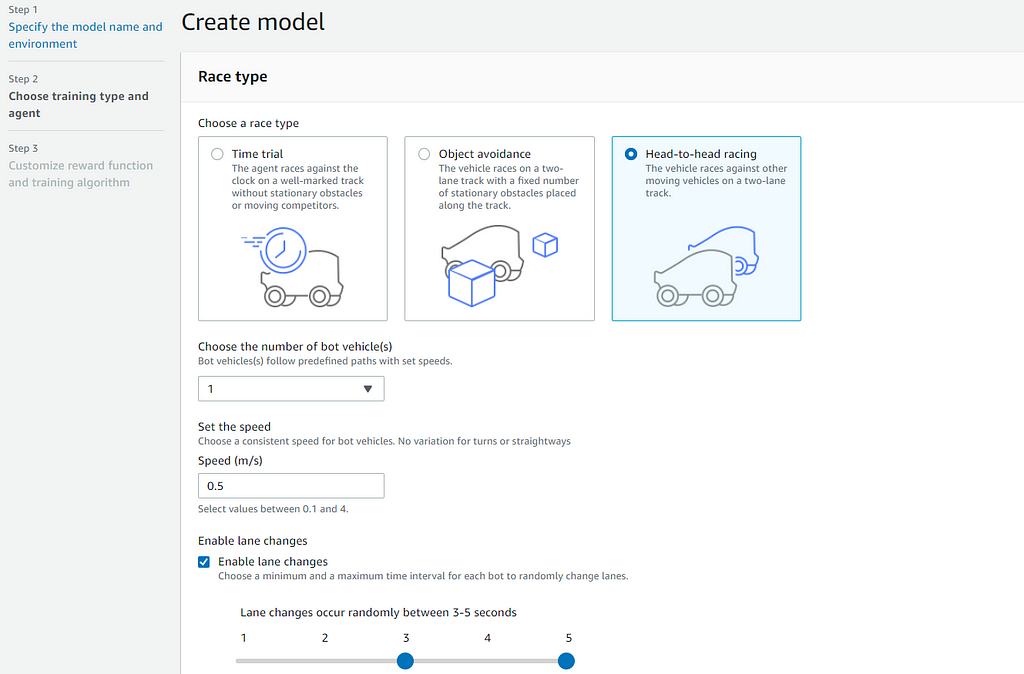

The third type is called “Head-to-head racing”, in which the agent competes with other moving agents on a two-lane track, aiming to finish in less time than the other vehicles. The number of agents to race against can be increased up to 4. Their speed can be adjusted between 0.1 and 4. In addition, lane changes can be enabled, with a lane change interval between 1 and 5 seconds.

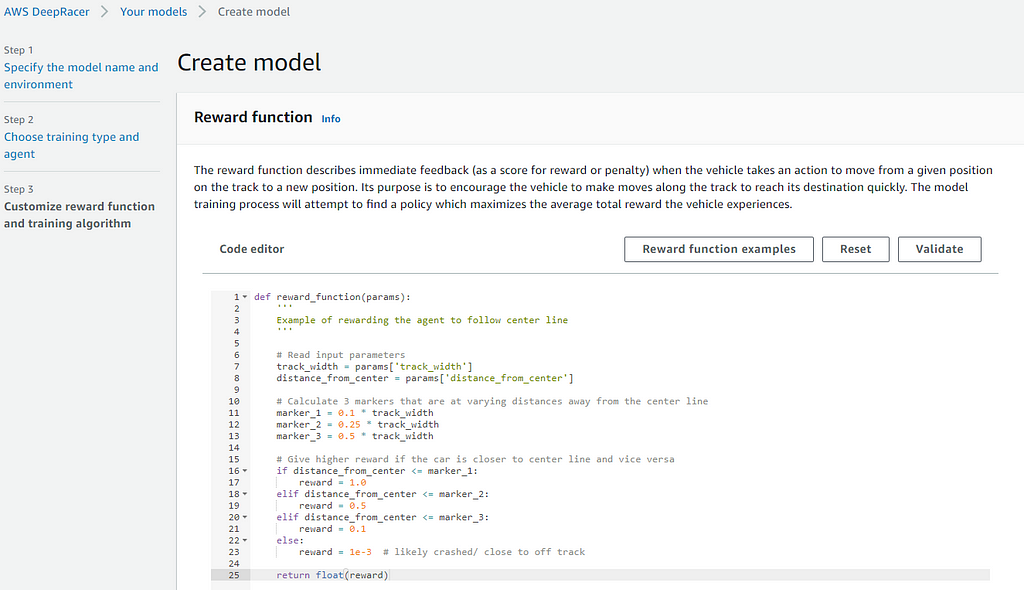
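The head-to-head limits described above can be captured in a small settings object that rejects out-of-range values. This is only a sketch: the class and field names are hypothetical, and the lower bound of one opponent is an assumption, since the console only states the upper bound of 4.

```python
from dataclasses import dataclass


@dataclass
class HeadToHeadSettings:
    """Hypothetical container for the head-to-head options in the console."""

    num_bots: int                        # opponents to race against (up to 4)
    bot_speed: float                     # opponent speed, between 0.1 and 4
    lane_change_enabled: bool = False
    lane_change_interval_s: float = 1.0  # between 1 and 5 seconds

    def __post_init__(self):
        # Assumed lower bound of 1 opponent; the upper bound comes from the console.
        if not 1 <= self.num_bots <= 4:
            raise ValueError("num_bots must be between 1 and 4")
        if not 0.1 <= self.bot_speed <= 4.0:
            raise ValueError("bot_speed must be between 0.1 and 4")
        # The interval only matters when lane changes are switched on.
        if self.lane_change_enabled and not 1.0 <= self.lane_change_interval_s <= 5.0:
            raise ValueError("lane_change_interval_s must be between 1 and 5")


settings = HeadToHeadSettings(num_bots=3, bot_speed=2.0, lane_change_enabled=True,
                              lane_change_interval_s=3.0)
```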

After choosing the “Race type” and “Agent”, you can continue by editing the reward function. The default code snippet generated for the “Time trial” environment can easily be edited and then validated by pressing the “Validate” button.

After editing the reward function, you can scroll down to the “Training algorithm and hyperparameters” section to specify the “number of epochs”, “learning rate”, “entropy”, “discount factor”, “loss type”, and “experience episodes”. If you do not edit them, the following default values are applied.
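As a minimal sketch of what a time-trial reward function can look like, the example below rewards the agent for staying close to the center line. `track_width` and `distance_from_center` are input parameters that DeepRacer passes in `params`; the marker thresholds and reward values are illustrative and may differ from the snippet the console generates for you.

```python
def reward_function(params):
    """Reward the agent for staying close to the center line."""

    # Read the input parameters provided by the simulator.
    track_width = params['track_width']
    distance_from_center = params['distance_from_center']

    # Three markers at increasing distances from the center line.
    marker_1 = 0.1 * track_width
    marker_2 = 0.25 * track_width
    marker_3 = 0.5 * track_width

    # The closer the car is to the center line, the higher the reward.
    if distance_from_center <= marker_1:
        reward = 1.0
    elif distance_from_center <= marker_2:
        reward = 0.5
    elif distance_from_center <= marker_3:
        reward = 0.1
    else:
        reward = 1e-3  # likely off track or about to leave it

    return float(reward)
```

The function is called once per simulation step, so even a simple shape like this one gives the agent a steady signal about which positions on the track are desirable.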

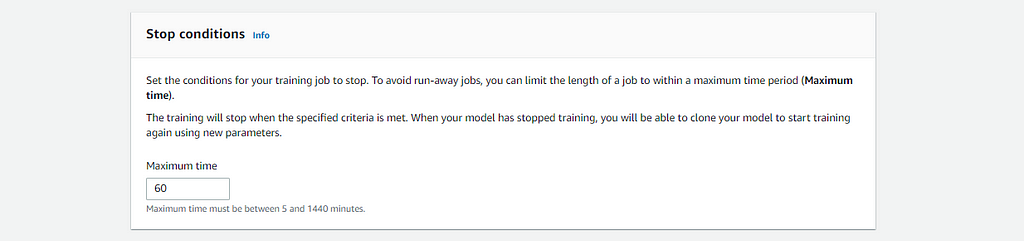
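Of these hyperparameters, the discount factor is perhaps the easiest to reason about with a small calculation: it controls how much future rewards count compared with immediate ones. The helper below is a hypothetical illustration of that idea and is not part of the DeepRacer console or its training code.

```python
def discounted_return(rewards, discount_factor):
    """Sum of rewards where the reward k steps ahead is weighted by discount_factor**k."""
    total = 0.0
    # Work backwards so each step adds its reward to the discounted future.
    for reward in reversed(rewards):
        total = reward + discount_factor * total
    return total


# Three steps with a reward of 1 each, for two illustrative discount factors.
short_sighted = discounted_return([1.0, 1.0, 1.0], 0.5)  # 1 + 0.5 + 0.25 = 1.75
far_sighted = discounted_return([1.0, 1.0, 1.0], 1.0)    # 1 + 1 + 1 = 3.0
```

A discount factor close to 1 makes the agent value rewards far down the track, while a smaller value makes it focus on the next few steps.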

In addition to the hyperparameters, you can also adjust the stop conditions for your training job. By default, the maximum time is set to 60 minutes, and it can be set anywhere between 5 and 1440 minutes.

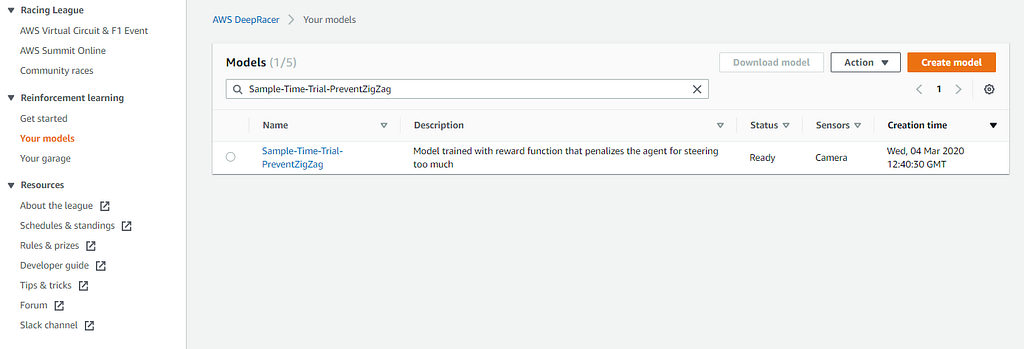

When you finish editing the model details, simply click “Next” to continue. If your model is created successfully, you should see it under the “Your Models” section of “Reinforcement learning”.

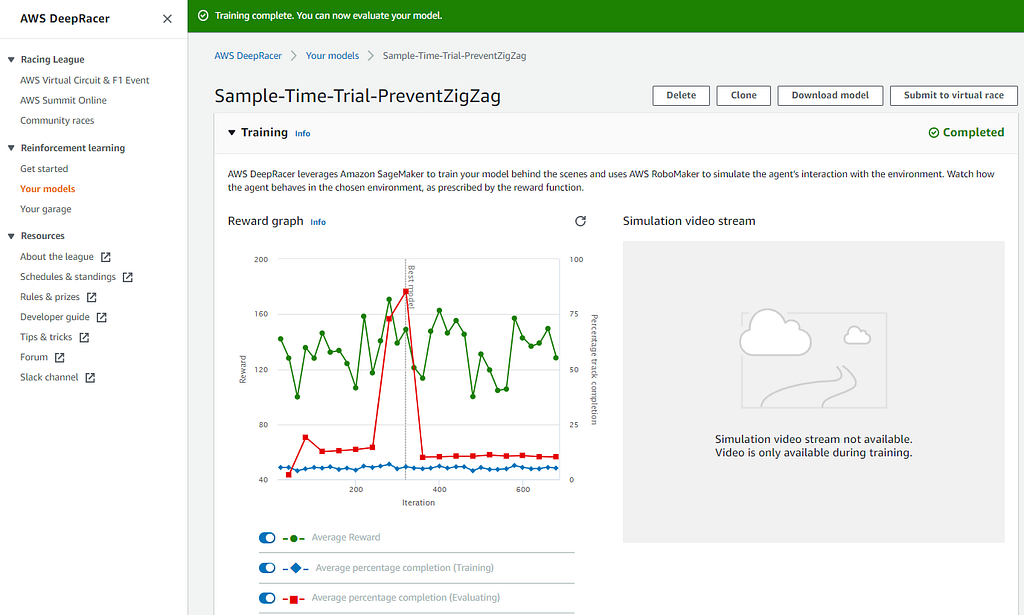

When you click on your model, you can view its details. After the training job stops, you can begin evaluating the trained model by having the agent race against the clock along a chosen track in simulation.

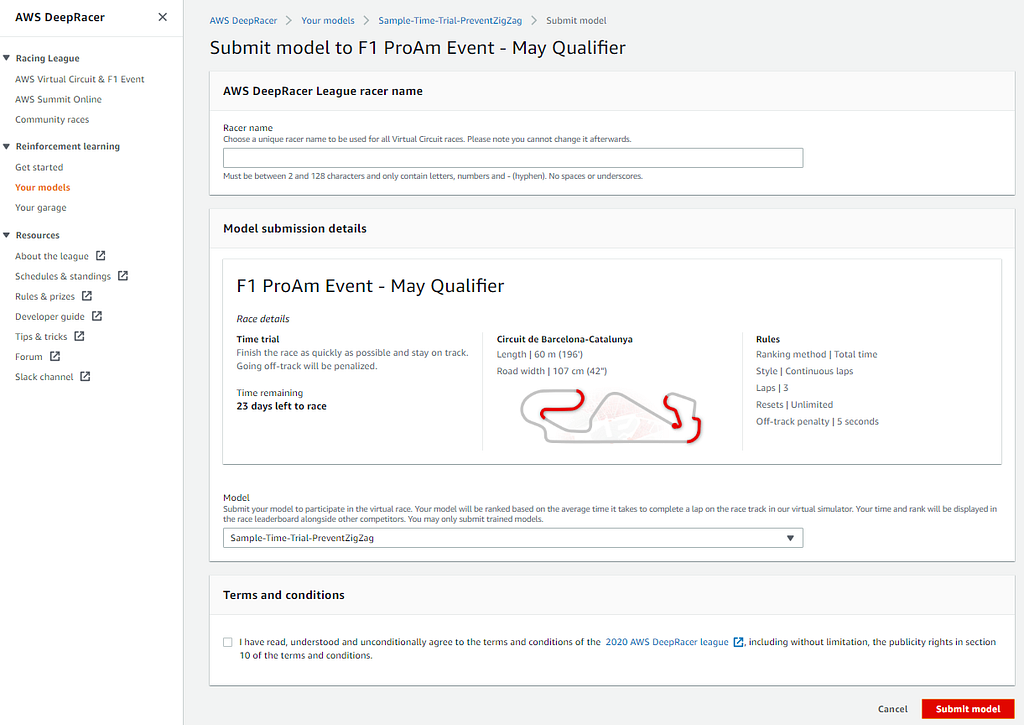

After you have completed the training and evaluation processes, you can submit your model to a race by clicking “Submit model” at the bottom right of the page.

Types of Races

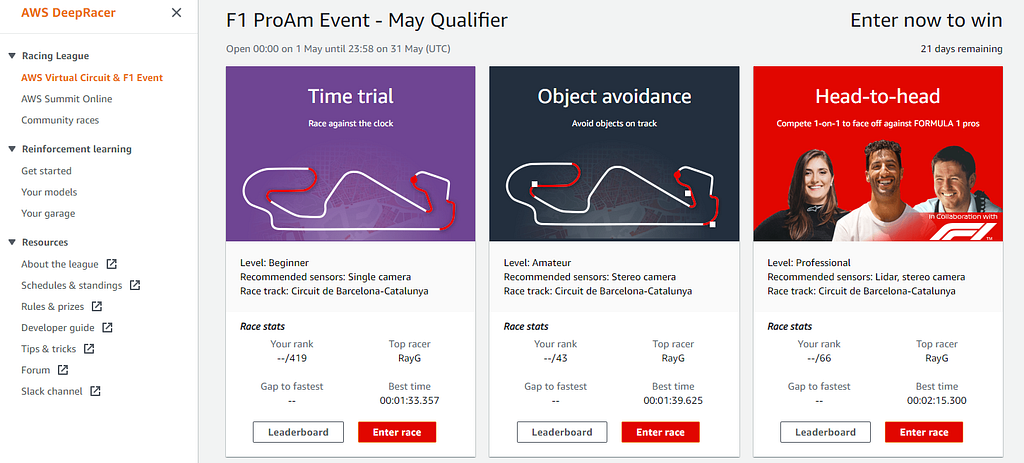

Once the training job of the autonomous driving model has finished, there are currently three types of leagues in which participants can race their vehicles.

AWS Virtual Circuit

Participants can join the AWS DeepRacer League to race their vehicles online through the AWS DeepRacer console, regardless of the country they are currently in. The league hosts time trial, object avoidance, and head-to-head environments. For more detailed information, you can watch the official video for the Virtual Circuit.

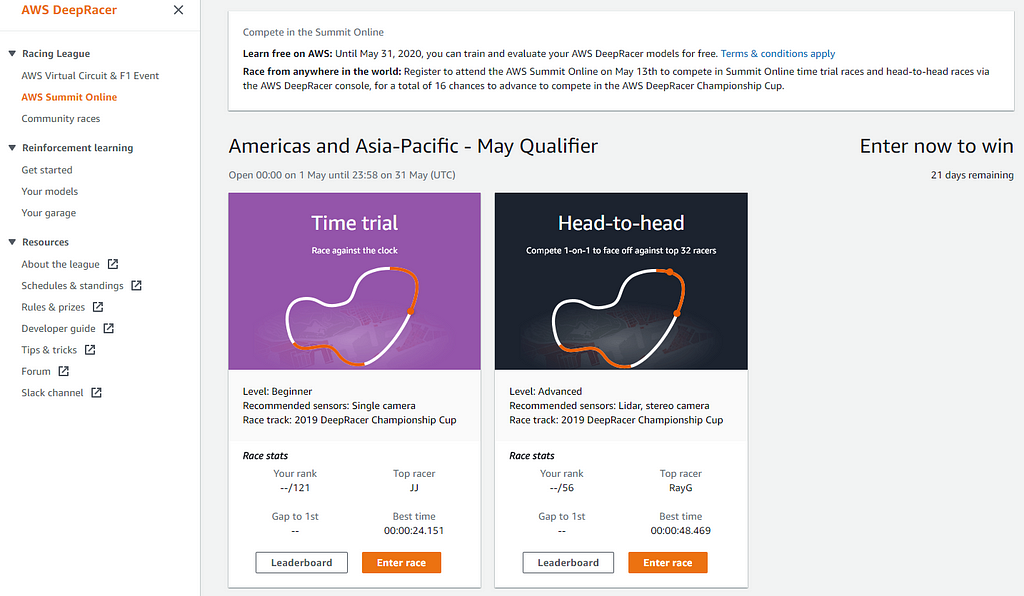

AWS Summit Circuit

Participants can join an AWS Summit or AWS Summit Online event to race their vehicles at the summit or online in 2020 from all around the world. The league hosts time trial and head-to-head environments. For each round of time trial and head-to-head races, the top-scoring participant receives an award and a better chance of joining the Championship Cup. For more detailed information, you can watch the official video and read the official rules for the summit.

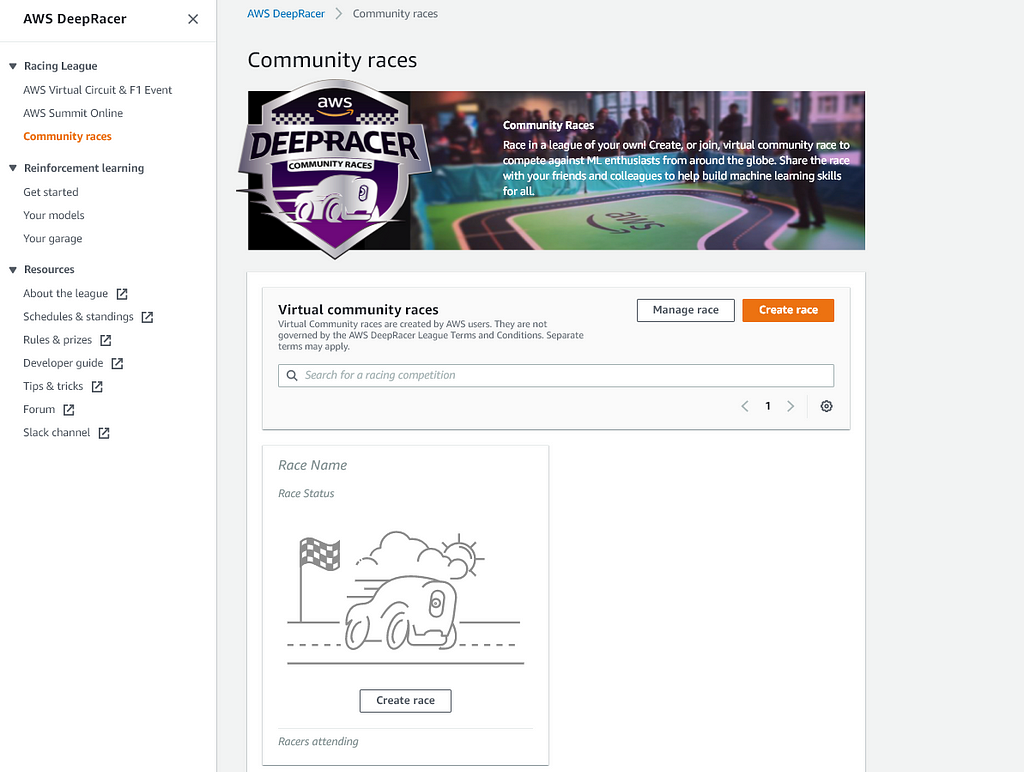

Community Races

Participants can create their own league to race their vehicles and to connect with other machine learning experts by adding them to their privately created league. This self-made league can host time trial and head-to-head environments. For more detailed information, see the official page for community races.

Questions and comments are highly appreciated!

References:

- AWS DeepRacer

- AWS DeepRacer League

- AWS DeepRacer Virtual Circuit

- AWS Summit

- AWS DeepRacer Community Race

Reinforcement Learning Meets with Wheels: AWS DeepRacer was originally published in Commencis on Medium, where people are continuing the conversation by highlighting and responding to this story.
