A Comprehensive Guide to A/B Testing for QA Engineers

In today’s competitive digital landscape, understanding user behavior and optimizing conversion rates is essential for success. A/B testing is a powerful tool that enables businesses to make informed, data-driven decisions. But how do QA engineers contribute to this process, and what are the key details of A/B testing? Let’s explore together.

What Is A/B Testing?

A/B testing is an experimentation process where two variations (A and B) of the same content are shown to different user groups to determine which performs better. It is widely used in areas such as website design, advertising campaigns, email subject lines, and app features.

For example, an e-commerce site might test different “Buy Now” button colors to see which results in more clicks. This approach reduces the risks of guesswork and ensures decisions are based on real data.

How to Perform A/B Testing

1. Define Your Goal

Identify the metrics you aim to optimize, such as click-through rate, sign-ups, or sales.

2. Form a Hypothesis

Decide what changes might positively impact your goals. For instance, a new call-to-action (CTA) might encourage more users to engage.

3. Create Variations

Design one or more alternative versions to compare against your current design (the control).

4. Run the Test Using Tools

Use platforms like Optimizely, VWO (Visual Website Optimizer), or Adobe Target to split traffic between your variations. Google Optimize was once a common choice as well, but Google discontinued it in September 2023.
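To make the idea of splitting traffic concrete, here is a minimal sketch of deterministic, hash-based bucketing. It is not how any particular platform implements assignment; the function name `assign_variation`, the variation names, and the 50/50 weights are assumptions chosen for illustration. The useful property for QA is that the same user always lands in the same variation, so a test can predict and verify the assignment.

```python
import hashlib


def assign_variation(user_id, experiment_id,
                     variations=("control", "variant_b"),
                     weights=(0.5, 0.5)):
    """Deterministically map a user to a variation (hypothetical helper)."""
    if len(variations) != len(weights):
        raise ValueError("variations and weights must have the same length")

    # Hash the experiment and user together so the same user can fall into
    # different buckets in different experiments.
    key = f"{experiment_id}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()

    # Turn the first 8 hex characters into a number in [0, 1).
    point = int(digest[:8], 16) / 2**32

    # Walk the cumulative weights until the point falls inside a bucket.
    cumulative = 0.0
    for name, weight in zip(variations, weights):
        cumulative += weight
        if point < cumulative:
            return name
    return variations[-1]  # guards against floating-point rounding


if __name__ == "__main__":
    # Repeated calls for the same user return the same variation.
    print(assign_variation("user-123", "exp-42"))
    print(assign_variation("user-123", "exp-42"))
```

Because the assignment depends only on the user and experiment identifiers, an automated test can call the same function to compute the expected variation and compare it with what the page actually rendered.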

5. Analyze the Results

After running the test, review metrics like conversion rates or engagement levels to identify the winning variation.
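One common way to judge whether a difference in conversion rates is more than noise is a two-proportion z-test. The sketch below is an illustration under stated assumptions, not the method used by any specific tool: the function name and the visitor and conversion counts are hypothetical, and a real analysis would also decide on sample size and test duration before the experiment starts.

```python
import math


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for the difference in conversion rates."""
    if n_a <= 0 or n_b <= 0:
        raise ValueError("both groups need at least one visitor")

    rate_a = conv_a / n_a
    rate_b = conv_b / n_b

    # Pooled conversion rate under the null hypothesis of no difference.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        raise ValueError("conversion rate is 0% or 100% in both groups")

    z = (rate_b - rate_a) / std_err

    # Two-sided p-value from the standard normal distribution:
    # 2 * (1 - Phi(|z|)) equals erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


if __name__ == "__main__":
    # Hypothetical counts: 200 of 5000 visitors converted on A, 250 of 5000 on B.
    z, p = two_proportion_z_test(200, 5000, 250, 5000)
    print(f"z = {z:.2f}, p = {p:.4f}")
```

A small p-value (for example, below a threshold such as 0.05 agreed on before the test) suggests the observed difference is unlikely to be due to chance alone.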

The Role of QA Engineers in A/B Testing

QA engineers play a critical role in ensuring the smooth execution of A/B tests by:

- Test Planning and Scenario Preparation: Understanding the scope, objectives, and variations of the A/B test, creating test scenarios, and clarifying which metrics will be measured.

- Setting Up Test Environments: Preparing the testing infrastructure so that experiments run correctly, and verifying that users are directed to the correct variation.

- Validation: Confirming that each variation functions correctly (UI, functionality, performance) and checking that data collection tools (e.g., Google Analytics, Optimizely) record accurate data.

- Monitoring Test Quality: Watching for anomalies or unexpected behaviors while the test is running.

- Analyzing Results: Statistically evaluating outcomes to help determine the winning variation, and offering actionable insights for future improvements.

By working closely with product teams and analysts, QA engineers ensure that A/B tests align with business objectives and deliver valuable insights.
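One anomaly worth monitoring is a traffic split that drifts from the planned ratio, often called a sample ratio mismatch. The sketch below checks a two-variation split with a chi-square goodness-of-fit test. The function name, the example counts, and the 0.001 alert threshold are assumptions for illustration, so adjust them to your own experiment setup.

```python
import math


def sample_ratio_mismatch(count_a, count_b, expected_share_a=0.5, alpha=0.001):
    """Return (chi2, p_value, flagged) for a two-variation traffic split."""
    total = count_a + count_b
    if total == 0 or not 0 < expected_share_a < 1:
        raise ValueError("need traffic and an expected share between 0 and 1")

    expected_a = total * expected_share_a
    expected_b = total - expected_a

    # Chi-square goodness-of-fit statistic with one degree of freedom.
    chi2 = ((count_a - expected_a) ** 2 / expected_a
            + (count_b - expected_b) ** 2 / expected_b)

    # For one degree of freedom, P(X > chi2) equals erfc(sqrt(chi2 / 2)).
    p_value = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p_value, p_value < alpha


if __name__ == "__main__":
    # Hypothetical counts: a planned 50/50 split that delivered 5000 vs 4600 users.
    chi2, p_value, flagged = sample_ratio_mismatch(5000, 4600)
    print(f"chi2 = {chi2:.2f}, p = {p_value:.6f}, flagged = {flagged}")
```

A flagged split does not say which variation is better. It signals that assignment or tracking may be broken, so the results should be investigated before anyone draws conclusions from them.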

Test Automation and A/B Testing

A/B testing, particularly for large user groups, can be challenging to execute manually. This is where test automation comes into play.

- Automation of Test Cases: Writing automated test cases that check each A/B test variation behaves correctly, which reduces manual testing effort.

- Data Collection and Validation: Using automation tools to test that analytics integrations work correctly, that users are directed to the intended variation, and that the correct data is collected. Typical checks include:

  - Verifying that page-specific or event-based tags fire correctly. For example, ensure a “Button Click” event is sent to Google Analytics under the correct category and with the correct attributes.

  - Verifying that the parameters used in data transmission (e.g., eventCategory, eventAction, userId) are accurate and complete.

  - Ensuring that tracking codes work correctly for each variation. For instance, verify that users in “Variation A” are tagged with the correct experiment_id.

- Regression Tests: Detecting regression errors that may affect the performance and functionality of the main system during A/B testing.

- Performance Tests: Ensuring both variations load quickly and meet performance benchmarks, and detecting bottlenecks or slow-loading components in specific variations (e.g., images, scripts, or third-party integrations). Key metrics include page load time, time to interactive (TTI), script execution time, and API response time.

- Load Tests: Determining whether the variations can handle expected or peak traffic without crashing or degrading performance. Key scenarios include simulating a high number of users accessing each variation simultaneously and testing the backend systems’ ability to process the additional A/B testing-related data. Key metrics include throughput, response time, error rate, and system resource usage.
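The data validation checks in this list can be sketched as a small helper that inspects a captured analytics event. This is an illustration only: the `validate_event` function, the `variation` field, and the sample values are hypothetical, and how you capture the outgoing event (for example, by intercepting network requests in your automation tool) depends on your stack.

```python
# Fields the captured event is expected to carry. "variation" is a
# hypothetical field name; use whatever your tracking plan defines.
REQUIRED_FIELDS = ("eventCategory", "eventAction", "userId",
                   "experiment_id", "variation")


def validate_event(event, expected_experiment_id, allowed_variations):
    """Return a list of problems; an empty list means the event passed."""
    problems = []

    # Every required parameter must be present and non-empty.
    for field in REQUIRED_FIELDS:
        value = event.get(field)
        if value is None or str(value).strip() == "":
            problems.append(f"missing or empty field: {field}")

    # The event must be tagged with the experiment under test.
    experiment_id = event.get("experiment_id")
    if experiment_id and experiment_id != expected_experiment_id:
        problems.append(f"unexpected experiment_id: {experiment_id!r}")

    # The variation must be one the experiment actually defines.
    variation = event.get("variation")
    if variation and variation not in allowed_variations:
        problems.append(f"unknown variation: {variation!r}")

    return problems


if __name__ == "__main__":
    captured = {
        "eventCategory": "Button",
        "eventAction": "Button Click",
        "userId": "u-123",
        "experiment_id": "exp-42",
        "variation": "A",
    }
    print(validate_event(captured, "exp-42", {"A", "B"}))
```

Returning a list of problems rather than failing on the first one lets a single test run report every tracking defect in an event at once.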

Popular A/B Testing Tools

Some of the most widely used tools for A/B testing include:

- Optimizely: Offers advanced features and a user-friendly interface.

- Google Optimize: Was fully integrated with Google Analytics for in-depth analysis. Google discontinued the product in September 2023, so it is no longer available for new tests.

- VWO (Visual Website Optimizer): A robust platform for test creation and data evaluation.

- Adobe Target: Provides powerful personalization and testing capabilities.

These tools often include integration and automation features that streamline workflows for QA engineers.

Real-World Examples

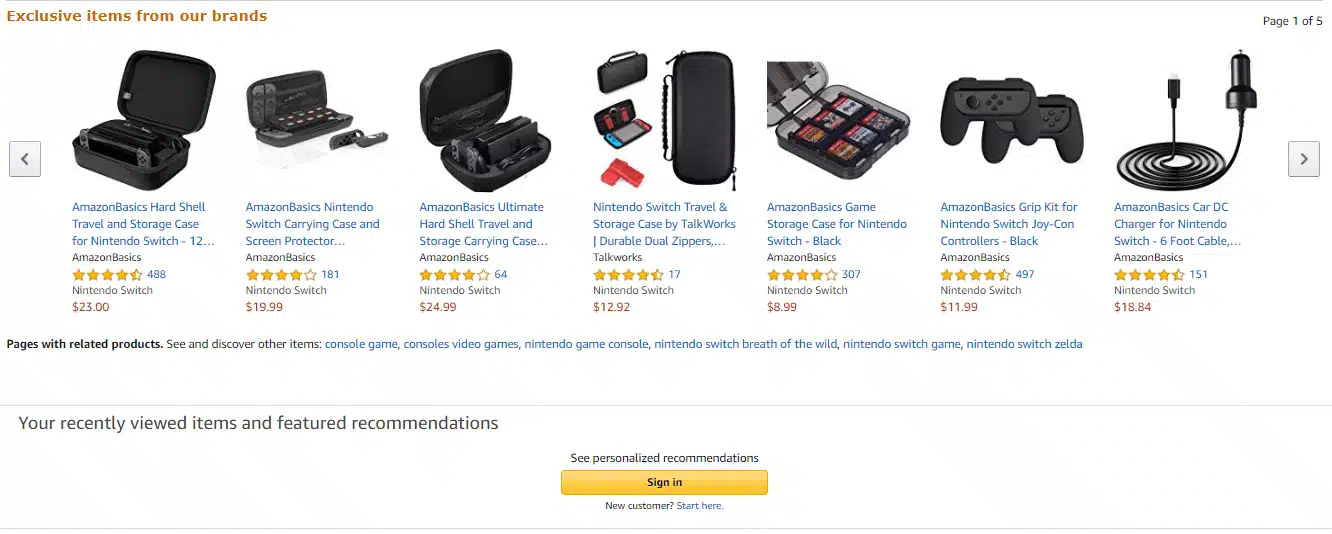

Amazon: Improved conversion rates by testing button designs and layouts. This example is from NELIO Software.

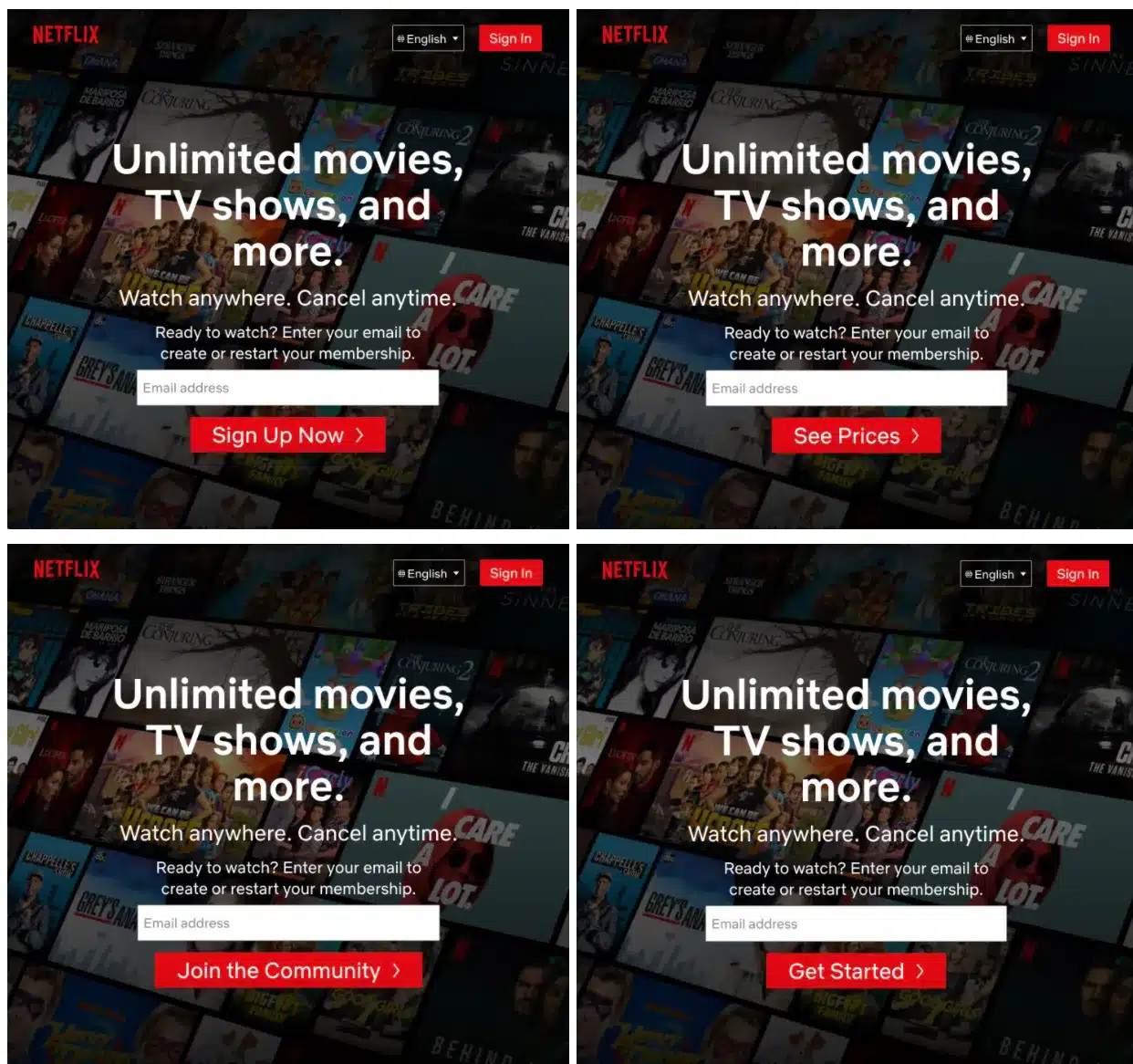

Netflix: Netflix uses A/B testing to test and refine its user interface. According to the Netflix Tech Blog, in 2019 the company tested a feature that let users shuffle the episodes of a TV show or watch them in order, and in 2021 it tested various texts for its subscribe button. Netflix also uses A/B testing to improve its content recommendation algorithm. For example, in 2018 the company tested a feature that let users hide titles they didn’t want to see in their recommendations.

In the button text experiment, Netflix ended up productizing “Get Started”, although, given the nature of A/B testing, the company keeps experimenting to see whether it can find a higher-performing result.

Key Takeaways

A/B testing is a cornerstone of modern product development and user experience optimization. QA engineers play a pivotal role in this process, combining technical expertise with analytical insight to ensure experiments are executed reliably and deliver actionable results.

By leveraging the strategies and tools discussed in this guide, your team can harness the full potential of A/B testing. From hypothesis formation to automated validation and performance monitoring, every step contributes to making data-driven decisions that resonate with users. Incorporating A/B testing into your workflow not only refines user experiences but also drives measurable business outcomes.

Embrace the iterative power of A/B testing to innovate confidently and stay ahead in the competitive digital landscape!

Cemrenur Şılbır

QA Engineer